There is a global push towards making AI more ethical and transparent. As critical contributions on the topic of AI have pointed out, even when computational applications are considered self-learning, there are always humans involved whenever they come into use (see e.g. Gray and Suri, 2019), often providing invisibilised labour from the global South (Irani, 2015; Moreschi et al., 2020). In the wider public discourse on AI, this is often reduced to the phrase ‘human in the loop’. A recent experience led me to ponder the relationship between technology and this ‘human in the loop’ beyond the debates I had been following. More specifically, it made me reflect on something I think of as the capacity to ‘expertly improvise’.

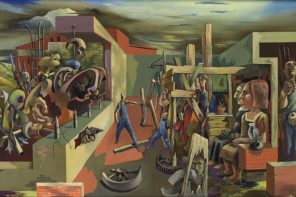

Let me explain. Not long ago, my mother went for a breast examination because of a visible irregularity. Her gynaecologist of many years, whom I call Dr Schäfer here, had decided to refer her for Magnetic Resonance Imaging (MRI), a technology that produces three-dimensional and very detailed images of organs and structures inside the body. This was an unusual response, since the mammogram, a low-energy X-ray examination commonly used for breast cancer screening, had not flagged anything abnormal. Nor had the subsequent ultrasound, which uses high-frequency soundwaves to create an image of what lies beneath the skin. Given that neither of these two diagnostic technologies had produced any tangible findings, the doctor now performing her MRI expressed surprise that my mum had been sent to him and that Dr Schäfer had gone to the trouble of making sure her insurance covered the procedure.

Dr Schäfer had acted because she was familiar with my mum’s history of breast cancer and the rest of her medical record. While her intuition and initiative did not produce immediate clarity, they proved to be warranted. A third instrumental intervention, the MRI, whose images are sensitive to soft tissue that can be hard to see in other imaging tests, retrospectively justified her inquisitive response and showed that there was indeed something. The next step was a needle biopsy to sample that something and determine whether cancerous cells were present. The result would also determine the course of the next stretch of life for my mum, who had been counting down the days towards the freedom of retirement.

There was another problem, though. The surgeon now had to guess where precisely the biopsy should be taken, because the ultrasound that would have guided him to the something identified by the MRI was still not picking anything up. He resolved to take five different samples instead of just one to increase the likelihood of penetrating what the MRI had contoured. For my mother, this meant both an excessively tender and swollen breast for more than a week and uncertainty as to whether a negative result would actually be that – a negative result. It could just as well be a mere swing-and-miss, extracting neighbouring healthy tissue rather than what might be invasive, unhealthy material.

Due to my current research interest in technologies and trust, I found myself surrounded by books on the social aspects of Artificial Intelligence. My scepticism towards the routine application of algorithmic solutions had only increased the more I engaged with the topic theoretically. While the sources I learnt from were engaging and shaped my way of thinking, they often still felt removed from my own experiential realm. At some point, I began wondering whether an algorithm could have guided the surgeon based on the coordinates from the MRI. Despite my cultivated apprehension of AI solutions, I would have very much welcomed such a calculated interference in the form of a precise technology at this point. The prospects of predictability and encryption suddenly appealed to me much more than they had when I was merely reading about them. This was perhaps also because the examples typically referenced as facilitators of questionable social practice are often automated processes with very little human input and little capacity for divergence or for thinking outside an algorithm-like set of rules.

AI in its current forms is known to have limited abilities: it cannot perform a broad range of tasks, only specific ones, but it performs these very precisely and quickly. Given the weeks that had already passed waiting for medical appointments to become available, this seemed enticing. At the same time, if automated, algorithm-based analysis of the mammogram had been the sole basis for decision-making (instead of giving weight to the acquired expertise of Dr Schäfer), the entire situation would have been dismissed immediately because the earlier imaging had been negative. The visible symptom on the surface of my mum’s body would have been bypassed because it did not align with the images produced of her body’s interior. Consequently, she would never have had the MRI to begin with. The language spoken through medical technologies could easily have drowned out my mother’s (and, by extension, many other people’s) concerns.

Improvisation as an idea is frowned upon, especially in medicine, and is even seen as unprofessional. Despite the institutionalisation of checklists as the normative gold standard, there are many situations and contexts in which professionals deviate from them (Timmermans and Epstein, 2010). I came to think of improvisation as a vital skill and framework that could be really useful when evaluating the ways in which technologies become integrated into systems. It helps render communication humane and step away from a categorically clear-cut way of processing information. What is more, thinking of improvisation as something grounded in expertise, and not necessarily something that happens ad hoc (Bertram and Rüsenberg, 2021), also means giving more weight to the unique communicative and empathetic capacities of the ‘human in the loop’ within care systems that pull together various highly specialised competencies.

What really stayed with me at the end of the day was that there seemed to be a dearth of answerability at each diagnostic stop involving both human and technological intervention (apart from Dr Schäfer, with whom my mum had built a connection). In fact, my mother was carelessly left waiting for results and was forced to make multiple follow-up phone calls. Uncertainty is part and parcel of these kinds of life junctures, and the segregation of checklist expertise from experiential processes of coming to a diagnosis is a general problem. However, taking technologies and their analytic and communicative capacities for granted as part of a process might make doctor-patient exchanges, already critiqued for becoming increasingly routinised, ever more bereft of the linkages that form part of careful communication. Accountability, or a lack thereof, has been problematised for AI implementations in recent years. The same is much less true of older, more established tools that have become firmly built into healthcare processes. With the ultrasound not showing the area of concern, the biopsy took place in a manner the doctor described as ‘randomly shooting into a dark forest in order to kill one little deer.’ Instead of suggesting another approach, the surgeon sent my mother home to wait for a result that would only be meaningful if it flagged cancer.

It was not just Dr Schäfer’s proximity to my mother and her ability to empathise with the situation that enabled her to offer care in the most fundamental sense of the word. By that, I mean more than sterile, elusive medical phrases, and more than an acknowledgement of the torment of the ongoing state of uncertainty. It was also her willingness to move away from the script, to improvise, and her resistance to becoming part of a routine, automated process of care involving a myriad of technologies and specialisations. The lack of precision was not predominantly a problem of the equipment being used. Ultimately, when the first, more random biopsy failed to produce any usable results, a way was found to take an accurate sample by inserting a wire into my mum’s breast during an MRI, a very painful but ultimately worthwhile process. Rather, it was the feedback loop between doctors and patient that illustrated the more relevant lack of clarity and a profound risk of disconnect between lived realities and formularised medical practice. Creating this feedback loop becomes even harder the fewer funds are available for healthcare, forcing medical practitioners into a machine-like role in processing their patients.

Cybernetics is a term often used in the context of automation and regulation. Margaret Mead summarised the then state-of-the-art understanding of the field as “a way of looking at things and as a language for expressing what one sees” (1968, p. 2). This includes an emphasis on ‘feed-back’ and on making things understandable outside of disciplinary specialisations. With AI being perhaps in some cases self-learning but not (yet?) capable of improvising and inventing algorithmic dictions anew, feedback and translation into the right kind of language seem central to making human-centred decisions with technological assistance. Building on a language that does not insist on zero-sum diagnostics (and I would imagine most of those concerned with the topic of automation would agree) is indeed essential when machines and their algorithms form part of care relationships, especially when communication happens across specialisations. Part of this language or repertoire, I believe, is the ability to act intuitively and to expertly improvise.

Algorithms can be described as socio-technical assemblages, a description that points to the relationality between humans and code. There have been numerous calls to pay attention to these interactions, and analysis often focuses on relationships with information and, more broadly, on understandings of what is real and desirable. As yet, there is still little insight into what this means for individual experiences. Where technologies form part of biomedical care and of the language spoken between patient and doctors (often in the plural, with different specialisations and their specific parlance), different versions or contextualisations of reality are being processed. For the surgeon, there was no logical next step to follow after the unusual event of technological unresponsiveness; it was simply not part of his operational checklist or algorithm. Instead of communicating this to my mum, he explained that what the MRI had flagged could be ‘anything’, without any explanation of what this semantically empty word might entail.

The technological inventions that were part of the entire medical process my mum had to undergo to find answers are largely taken for granted and not considered AI. The term ‘MRI’, for instance, rings a bell for many as a singular diagnostic approach or as the uncomfortable experience of lying very still inside a large tube. Rarely are these machines explained in terms of their precise functionality or as part of a larger care process. Yet it makes sense to consider the dialectic human-machine relationship and to make its tensions more visible. Technologies may be precise, but they cannot communicate, or at least not in a way that humans will find satisfactory (anyone who has ever struggled with a chatbot on a website, or with voice recognition when not having a ‘standard accent’, will agree). Often, technologies are not even fully understood by those who use them or by those who are advised to make use of them. They also cannot explain what an occasional glitch in their enviable precision means for individuals’ realities, let alone suggest alternative routes to their calculated logic. Broadly speaking, few would want machines to single-handedly make important decisions, unless they stood to profit financially from it. The wealth of human experience, the ability to expertly improvise while weighing different scenarios, cannot be imitated and digitally outsourced.

Image of an MRI machine by liz west, courtesy of Flickr.com.

References:

Bertram, G. and Rüsenberg, M., 2021. Improvisieren! Lob der Ungewissheit: Was bedeutet das alles? Leipzig: Reclam Books.

Gray, M.L. and Suri, S., 2019. Ghost work: How to stop Silicon Valley from building a new global underclass. New York: Eamon Dolan Books.

Irani, L., 2015. Difference and dependence among digital workers: The case of Amazon Mechanical Turk. South Atlantic Quarterly, 114(1), pp.225-234.

Mead, M., 1968. Cybernetics of cybernetics. In: Purposive systems: Proceedings of the First Annual Symposium of the American Society for Cybernetics. New York: Spartan Books.

Moreschi, B., Pereira, G. and Cozman, F.G., 2020. The Brazilian Workers in Amazon Mechanical Turk: dreams and realities of ghost workers. Contracampo, 39(1).

Timmermans, S. and Epstein, S., 2010. A world of standards but not a standard world: Toward a sociology of standards and standardization. Annual Review of Sociology, 36, pp.69-89.

Featured image by Mike MacKenzie, courtesy of Flickr.com.